The artificial intelligence arms race has a new disruptor—DeepSeek, a Chinese AI startup that has quickly gained traction for its advanced language models.

Positioned as a low-cost alternative to industry giants like OpenAI and Meta, DeepSeek has drawn attention for its rapid growth, affordability, and potential to reshape the AI landscape.

But as the buzz around its capabilities grows, so do concerns about data privacy, cybersecurity, and the implications of feeding personal information into AI tools with uncertain oversight.

What Is DeepSeek, and Why Is It Making Headlines?

DeepSeek’s AI models, including its latest version, DeepSeek-V3, claim to rival the most sophisticated AI systems developed in the U.S.—but at a fraction of the cost.

According to reports, training its latest model required just $6 million in computing power, compared to the billions spent by its American counterparts. This affordability has allowed DeepSeek to climb the ranks, with its AI assistant even surpassing ChatGPT as the top free app on Apple’s U.S. App Store.

What makes DeepSeek’s rise even more surprising is how abruptly it entered the AI race. The company grew out of a Chinese hedge fund, High-Flyer, an unusual origin that has fueled speculation about how it managed to develop such advanced models so quickly. Unlike other AI startups that spent years in research and development, DeepSeek seemed to emerge overnight with capabilities on par with OpenAI and Meta.

However, DeepSeek’s meteoric rise has sparked skepticism. Some analysts and AI experts question whether its success is truly due to breakthrough efficiency or if it has leveraged external resources—potentially including restricted U.S. AI technology. OpenAI has even accused DeepSeek of improperly using its proprietary tech, a claim that, if proven, could have major legal and ethical ramifications.

Why Consumers Should Be Cautious

One of the biggest concerns surrounding DeepSeek isn’t just how it handles user data—it’s that it reportedly failed to secure it altogether.

According to The Register, security researchers at Wiz discovered that DeepSeek left a database completely exposed, with no password protection, allowing public access to more than a million log entries containing chat history, API keys, backend data, and operational details.

This means that conversations with DeepSeek’s chatbot, including potentially sensitive information, were openly available to anyone on the internet. Worse still, the exposure reportedly could have allowed attackers to escalate privileges and gain deeper access into DeepSeek’s infrastructure. While the issue has since been fixed, the incident highlights a glaring oversight: even the most advanced AI models are only as trustworthy as the security behind them.

Here’s why caution is warranted:

- Data Privacy Risks: AI chatbots process and store conversations, which may be used for further training, sold to third parties, or accessed by unauthorized entities. It remains unclear how DeepSeek handles user data or whether its security protocols align with global privacy standards.

- Regulatory Uncertainty: Companies that serve customers in California or the European Union must comply with laws like the California Consumer Privacy Act (CCPA) and the General Data Protection Regulation (GDPR). DeepSeek is based in China and operates under a different legal framework, and it is unclear how those protections would be enforced for its users. This lack of regulatory clarity could mean weaker protections for user data.

- Potential Cybersecurity Threats: History has shown that AI tools can be manipulated for malicious purposes, from deepfake scams to social engineering attacks. If DeepSeek’s security measures are not robust, it could become a target for cybercriminals looking to exploit vulnerabilities.

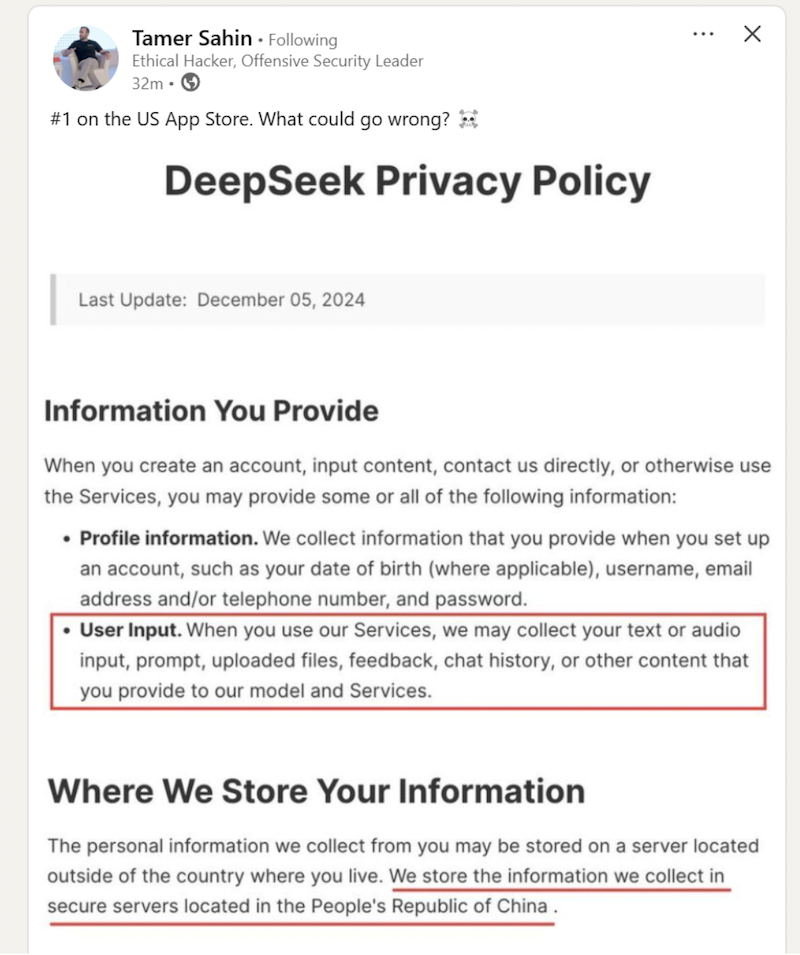

DeepSeek specifically states in its terms of service that it collects, stores, and has permission to share just about all the data you provide while using the service.

Figure 1. Screenshot of DeepSeek Privacy Policy shared on LinkedIn

The policy specifically notes collecting your profile information, credit card details, and any files or data shared in chats. What’s more, that data isn’t stored in the United States; according to the policy, it is kept on servers in China. DeepSeek is a Chinese company with limited required protections for U.S. consumers and their personal data.

How to Stay Safe When Using AI Chatbots

If you’re using AI tools—whether it’s ChatGPT, DeepSeek, or any other chatbot—it’s crucial to take steps to protect your information:

- Avoid sharing personal or sensitive data. AI chatbots are not secure vaults; treat them like public forums. You wouldn’t post your Social Security number or passwords to Facebook, so don’t share those details with chatbots either.

- Review privacy policies carefully. Before using a new AI model, read its privacy policy to see what data is collected, how long it is stored, and who it can be shared with.

- Use disposable or temporary email addresses. If a chatbot requires registration, consider using an alias to prevent your primary email from being linked to the service.

- Enable multi-factor authentication. If an AI platform offers account security features, enable them to add an extra layer of protection.
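
For readers who paste text into chatbots from scripts or internal tools, the first tip above can be partly automated. The following is a minimal Python sketch, not a complete solution: the `redact` function and its three patterns are hypothetical and only illustrate masking a few obvious identifiers (U.S. Social Security numbers, email addresses, and card-like digit runs) before a prompt leaves your machine. Real personal data takes many more forms than these patterns cover.

```python
import re

# Illustrative patterns only (an assumption, not an exhaustive detector).
PATTERNS = {
    # U.S. Social Security number written as 123-45-6789
    "SSN": re.compile(r"\b\d{3}-\d{2}-\d{4}\b"),
    # Simple email shape: local part, "@", domain with at least one dot
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    # 13 to 16 digits, optionally separated by single spaces or hyphens
    "CARD": re.compile(r"\b\d(?:[ -]?\d){12,15}\b"),
}


def redact(text: str) -> str:
    """Replace anything matching the patterns above with a placeholder."""
    for label, pattern in PATTERNS.items():
        text = pattern.sub(f"[{label} REDACTED]", text)
    return text


if __name__ == "__main__":
    prompt = "My SSN is 123-45-6789 and my email is jane.doe@example.com."
    print(redact(prompt))
```

A filter like this is a safety net, not a substitute for judgment: it will miss names, addresses, account numbers in other formats, and anything sensitive that does not follow a fixed pattern.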

As AI chatbots like DeepSeek gain popularity, safeguarding your personal data is more critical than ever. With McAfee’s advanced security solutions, including identity protection and AI-powered threat detection, you can browse, chat, and interact online with greater confidence—because in the age of AI, privacy is power.